This week I’ve been looking at Shader Variant Stripping while using a Custom Scriptable Rendering Pipeline (SRP).

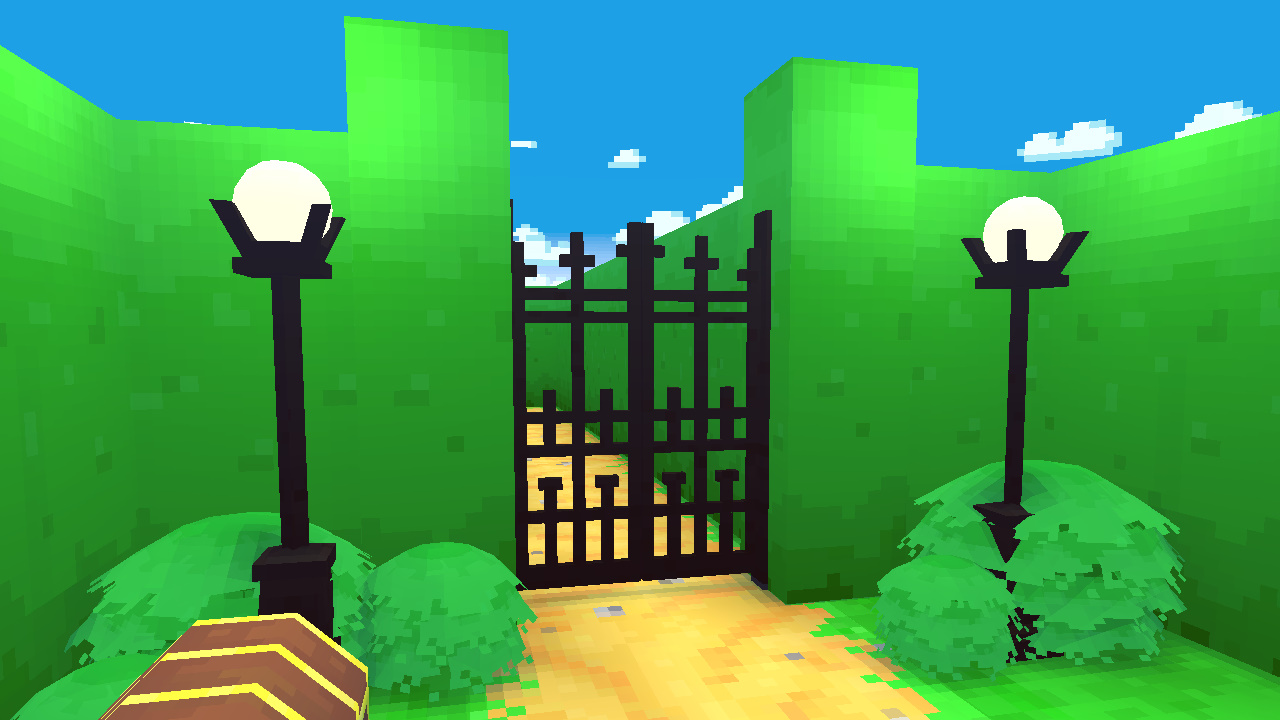

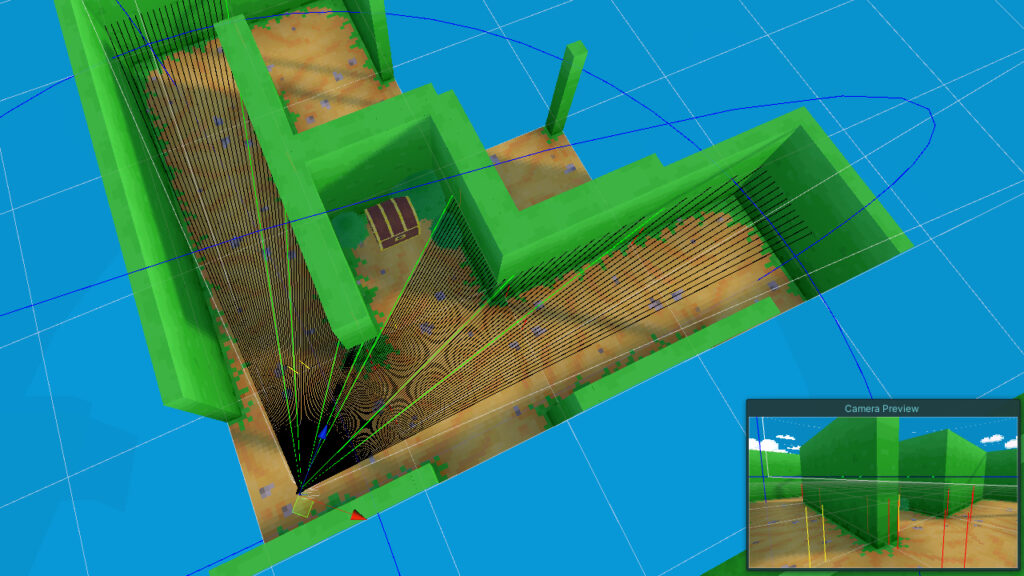

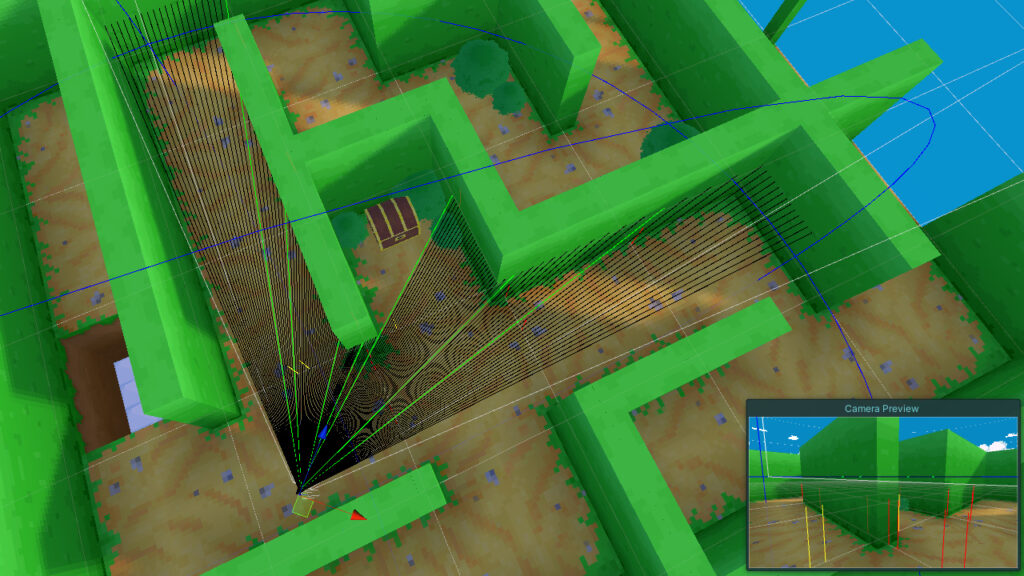

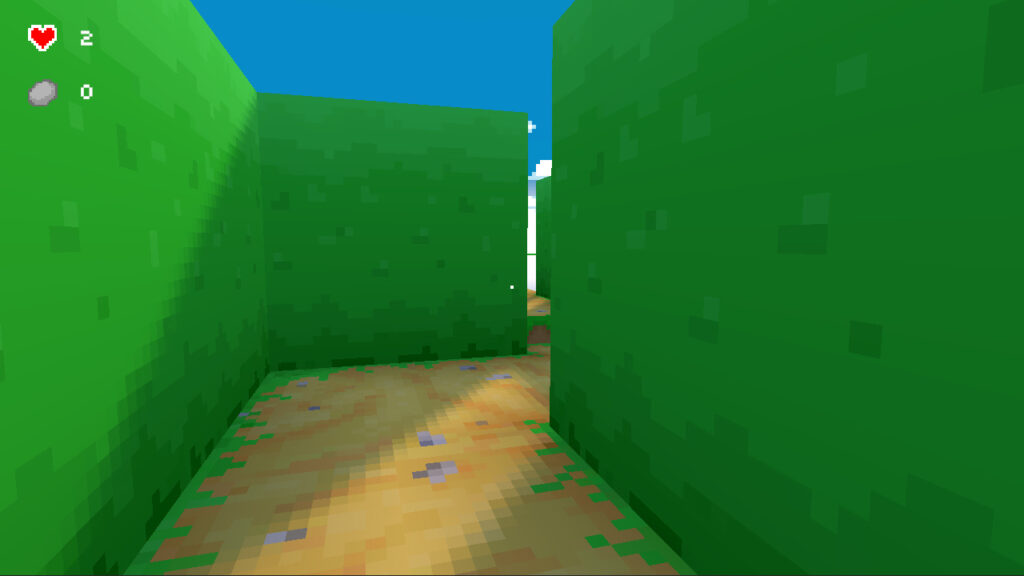

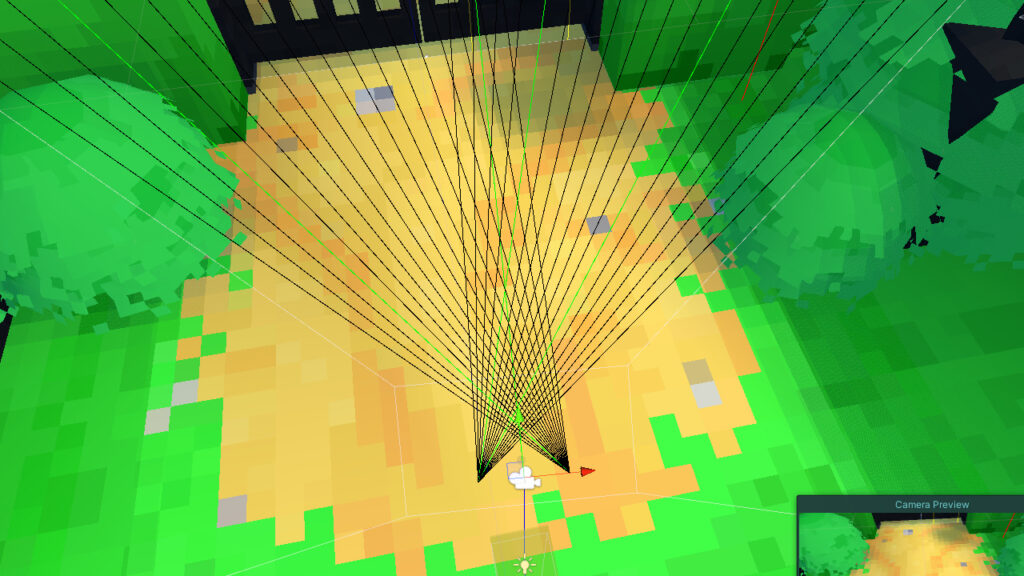

Last year I was working on a small project called “The Maze Where The Minotaur Lives” to create a Custom Scriptable Rendering Pipeline and learn more about Shaders and Rendering in Unity.

And on and off for the last year, I’ve been taking what I’ve learned to create another Custom SRP to refine my understanding and address issues I encountered during that project.

One of those issues was Shader Variants.

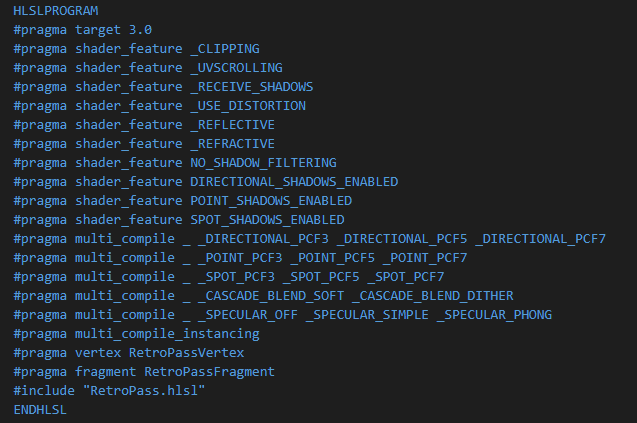

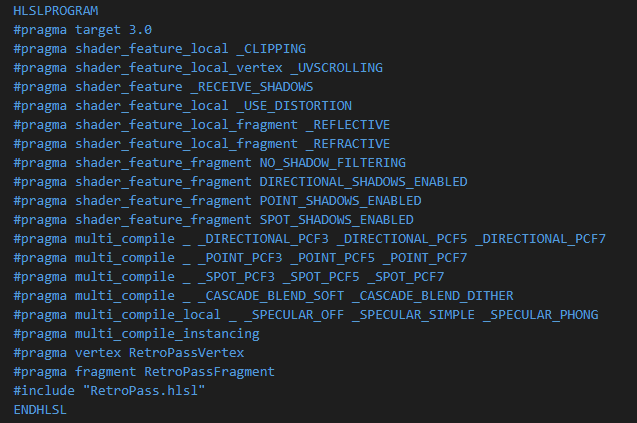

Unity’s Shader compiler automates the creation of every possible shader variant. For every keyword declared in a “shader_feature” or “multi_compile” pragma, it generates a variant for each combination with the keywords from the other shader feature and multi-compile pragmas.
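To get a feel for how quickly this multiplies, here is a rough model of the count in Python. It assumes each pragma line contributes one choice per keyword it lists and that the total is the product across all pragma lines. The keyword names are made up for illustration.

```python
from itertools import product
from math import prod

# Hypothetical pragma lines: each inner list is the keyword set of one
# multi_compile / shader_feature directive ("_" stands for "no keyword").
pragmas = [
    ["_", "SHADOWS_HARD", "SHADOWS_SOFT"],
    ["_", "FOG_LINEAR", "FOG_EXP"],
    ["_", "USE_SPECULAR"],
    ["_", "USE_NORMAL_MAP"],
]

# The variant count is the product of the sizes of every keyword set.
variant_count = prod(len(keywords) for keywords in pragmas)  # 3 * 3 * 2 * 2 = 36

# Enumerating every combination gives the same number.
variants = list(product(*pragmas))
```

Four small pragma lines already give 36 variants, and every extra on/off toggle doubles that number.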

This is great since it means you don’t have to manually create these variations yourself, which is very time-consuming. But what it does mean is that Unity will automatically create EVERY possible shader variant, whether they are used or not, which is very time-consuming. >: (

This leads to extremely long build times.

This isn’t too bad, since Unity caches the shader compiler results during the first build so that they can be reused in subsequent builds. But when you’re working on a project where shader changes are frequent, the cache doesn’t help much, because the changed shaders have to recompile every single time.

What’s worse is that Unity will bundle all of these shader variants with your build, whether they are used or not, adding unnecessary bloat to your final build.

The initial build times I was experiencing on The Maze Where The Minotaur Lives were 2 hours+, creating 120,000+ shader variants for a single diffuse shader. It may have been more since it was some time ago.

Two hours is not something to be proud of and every small change to a shader meant torment.

At University (in 2007), when I was learning about animation, rendering, and compositing for Film, I would often hear the bleeding edge students gloating about having 3-4 hour render times for a single frame in 3DS Max.

They would turn on all the settings, Global Illumination, Ray Traced lighting, and anything else that online tutorials said would make it look pretty.

I never understood that.

Incidentally, my renders took 4 minutes per frame and looked as bad as theirs.

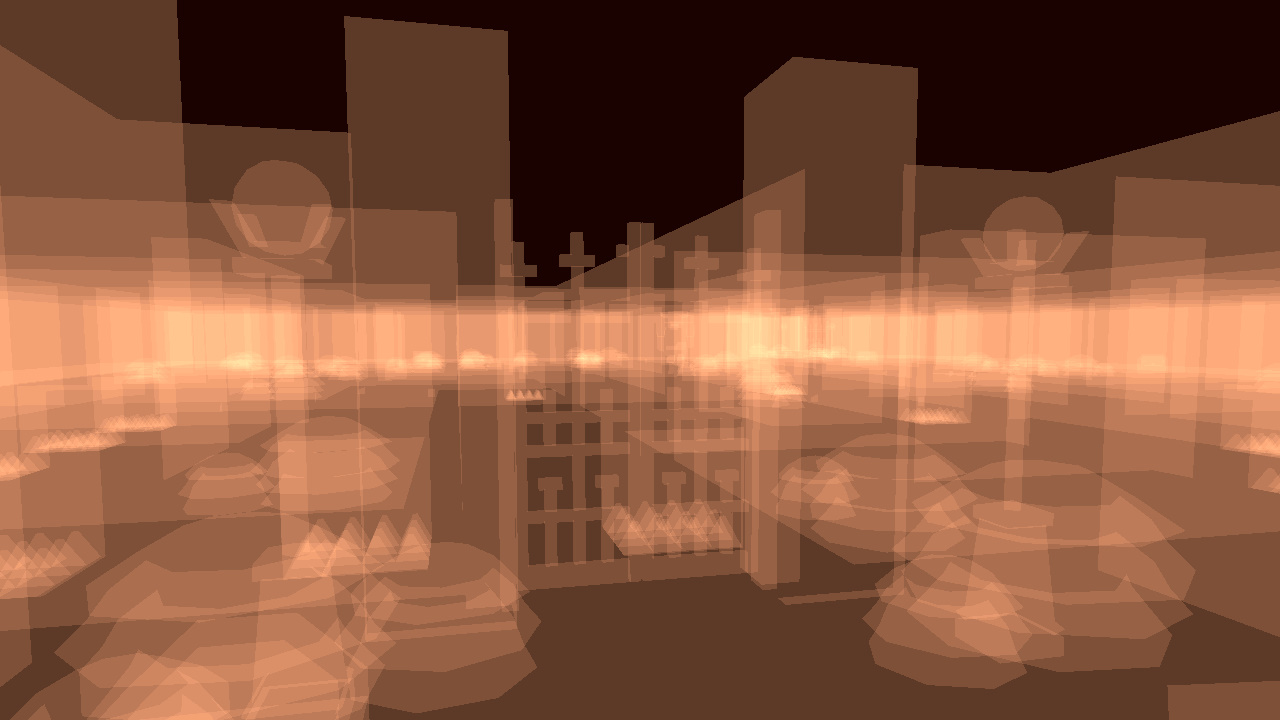

As an experiment, I wanted to see how long it would take to compile all the shaders when I removed a few optimizations.

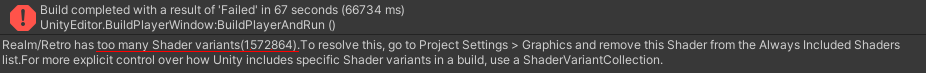

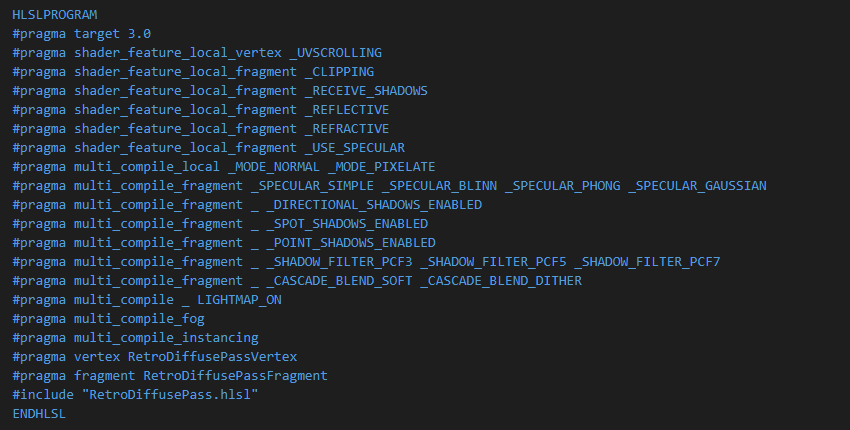

And this is what it looks like trying to build it.

To get it working again, I used the “vertex” and “fragment” suffixes to narrow down which shader stage each keyword should compile in. I also used the “local” suffix to ensure that certain keywords only applied within their own shader, and not in combination with other shaders.
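As a rough illustration of why the stage suffixes help, the sketch below counts variants per shader stage. It is a simplified model that assumes a keyword set without a suffix multiplies every stage, while a suffixed set only multiplies its own stage. The pragma sets are hypothetical, and actual behaviour can differ between graphics APIs.

```python
from math import prod

# (stage, number of keyword options) for hypothetical pragma lines.
# A stage of None means the directive has no stage suffix.
pragmas = [
    (None, 3),
    ("fragment", 4),
    ("fragment", 2),
    ("vertex", 2),
]

def count_for_stage(stage, pragmas):
    """Variants for one stage: only the directives that apply to it multiply."""
    return prod(n for s, n in pragmas if s is None or s == stage)

def count_without_suffixes(pragmas):
    """Variants per stage if every directive applied to every stage."""
    return prod(n for _, n in pragmas)

vertex = count_for_stage("vertex", pragmas)      # 3 * 2 = 6
fragment = count_for_stage("fragment", pragmas)  # 3 * 4 * 2 = 24
unsuffixed = count_without_suffixes(pragmas)     # 3 * 4 * 2 * 2 = 48
```

In this toy setup, the vertex stage drops from 48 variants to 6 and the fragment stage from 48 to 24.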

And this is what the build looks like now.

It’s an improvement, but it would still take hours to build, and it still includes many variants that will never be used. And for Android builds, these numbers double.

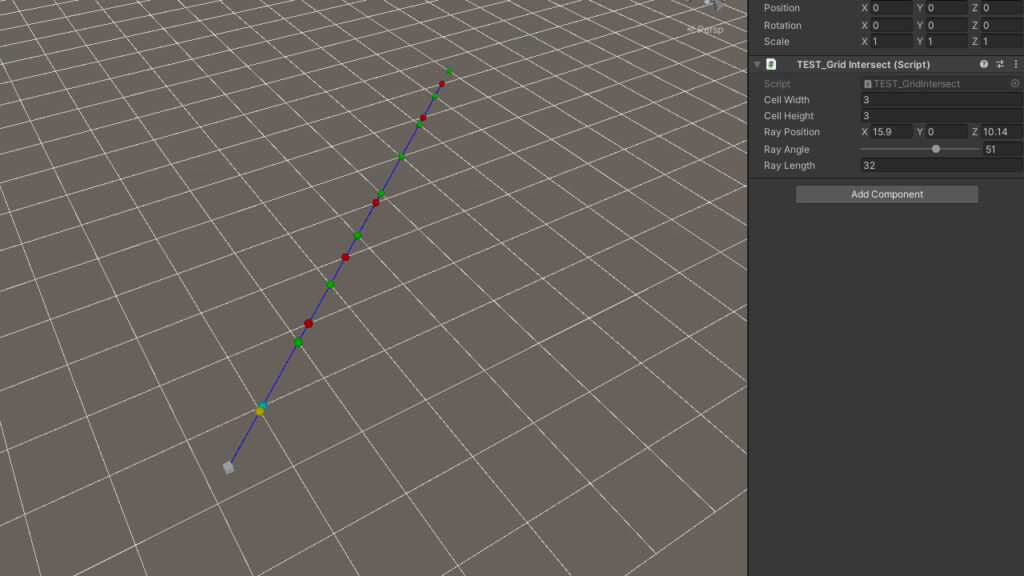

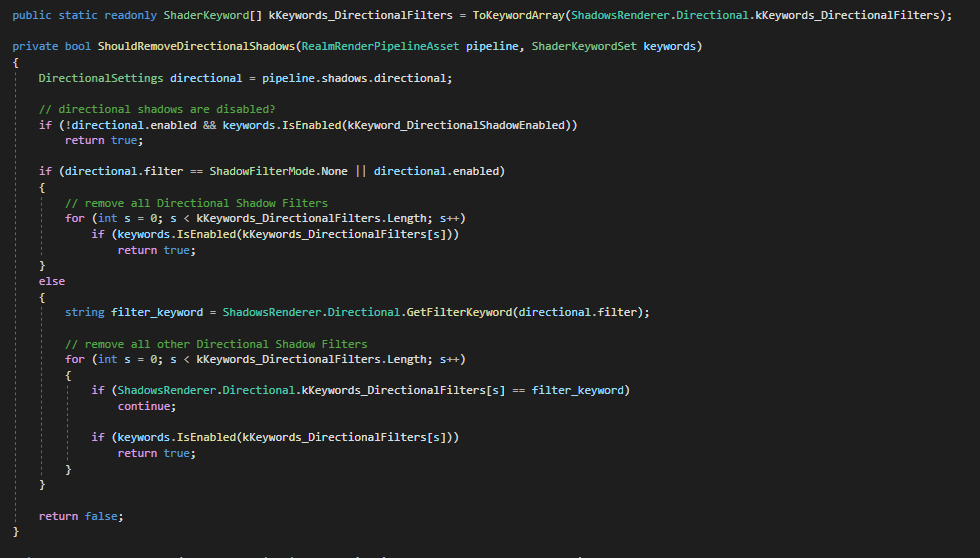

To optimize and strip this further, I wrote a preprocess step that implements the IPreprocessShaders interface and uses the rendering pipeline settings to determine which shader variants can safely be left out.
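The idea behind the stripping step can be modelled outside Unity. In Unity, the `OnProcessShader` callback of IPreprocessShaders receives a list of variants for a shader, and you remove the entries you don’t want compiled. The Python sketch below mirrors that shape. It is a simplified model rather than the actual preprocess code, and the settings fields and keyword names are hypothetical.

```python
from dataclasses import dataclass
from itertools import product

@dataclass
class PipelineSettings:
    # Hypothetical pipeline settings; real ones depend on your pipeline asset.
    shadows_enabled: bool
    fog_enabled: bool

def should_strip(variant, settings):
    """Return True when a variant enables a feature the pipeline has turned off."""
    if not settings.shadows_enabled and any(k.startswith("SHADOWS_") for k in variant):
        return True
    if not settings.fog_enabled and any(k.startswith("FOG_") for k in variant):
        return True
    return False

def strip_variants(variants, settings):
    """Remove unwanted variants in place, iterating backwards so removal is safe."""
    for i in range(len(variants) - 1, -1, -1):
        if should_strip(variants[i], settings):
            del variants[i]

pragmas = [
    ["_", "SHADOWS_HARD", "SHADOWS_SOFT"],
    ["_", "FOG_LINEAR", "FOG_EXP"],
    ["_", "USE_SPECULAR"],
]
# Each variant is the set of keywords it enables.
variants = [frozenset(k for k in combo if k != "_") for combo in product(*pragmas)]
total_before = len(variants)  # 3 * 3 * 2 = 18

settings = PipelineSettings(shadows_enabled=True, fog_enabled=False)
strip_variants(variants, settings)
total_after = len(variants)   # 3 * 1 * 2 = 6
```

With fog disabled in the settings, every variant that enables a fog keyword is dropped before it would be compiled.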

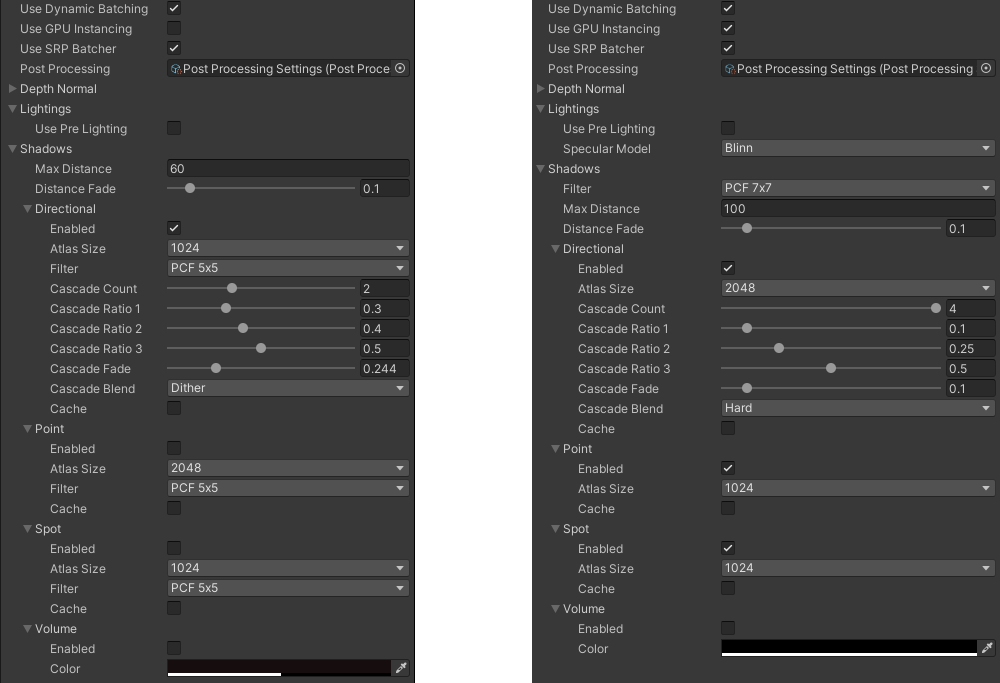

This is what the builds look like now.

The stripping process can take some time depending on the number of variants, taking 10+ minutes for some shaders. But it’s a huge reduction in build time, from hours to minutes. It also leaves out the shader combinations that will never be used.

But when working on Shaders, 10 minutes is still a lot of time to test small changes.

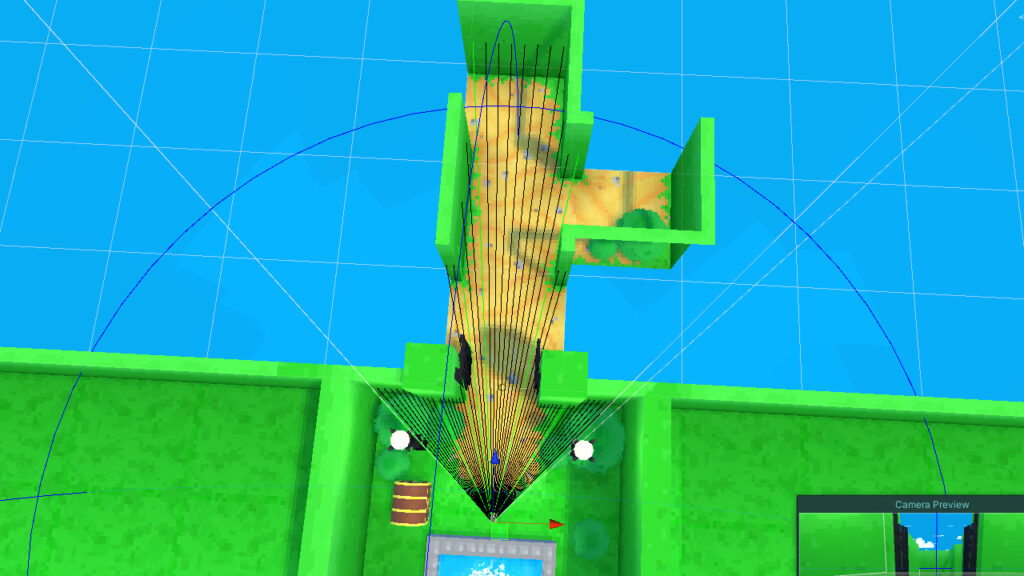

So in the new Retro Rendering Pipeline, I wanted to improve on this, focusing on removing unnecessary features and simplifying the Material and Rendering pipeline GUI.

The biggest change I made was combining the Shadow Filtering settings into a single option shared by all Light types, which reduces the number of shader variants significantly. I also made the Specular lighting model a global setting instead of a per-material one, simplifying the material interface to a simple “Use Specular” check box.
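To see why merging the Shadow Filtering options helps so much, compare one keyword set per Light type against a single shared set. The light types and the number of filter modes below are assumed for illustration only.

```python
# Hypothetical light types and filter mode count, for illustration only.
light_types = ["directional", "point", "spot"]
filter_modes = 4  # e.g. off, hard, soft low, soft high

# One independent filtering keyword set per light type: the sets multiply.
per_light_type = filter_modes ** len(light_types)  # 4 * 4 * 4 = 64

# A single filtering option shared by every light type.
shared = filter_modes                               # 4

reduction_factor = per_light_type // shared         # 16
```

Since this factor multiplies against every other keyword set in the shader, a 16x cut here is a 16x cut in the total variant count.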

The new Retro Diffuse shader during build time (without stripping) generates 42,000+ Fragment Variants, taking 1.5+ hours to compile. With the variant stripping preprocess, the number is down to 512, taking less than 5 minutes to compile.

The reduction in build time is very welcome.

If you’d like to know more, the Unity Blog posted a great article, “Stripping scriptable shader variants”, covering shader stripping in-depth. It’s worth a read if you’re working with Shaders and/or Custom Rendering Pipelines.