I started adding lighting support to the little maze project that I’m using to learn Unity’s Scriptable Render Pipeline (SRP).

I’ve been following catlikecoding’s SRP tutorials as a starting point, both to navigate some of the pipeline’s quirks and to see how lighting can be handled.

If you’re a Unity developer, check out catlikecoding. Their tutorials are great.

It took a few days to get through the ones I wanted to learn about. But once I got them working, I started to experiment.

I’ve always found that a good way to learn anything is to follow the steps laid out, then divert off and experiment, giving yourself a chance to learn by making your own mistakes.

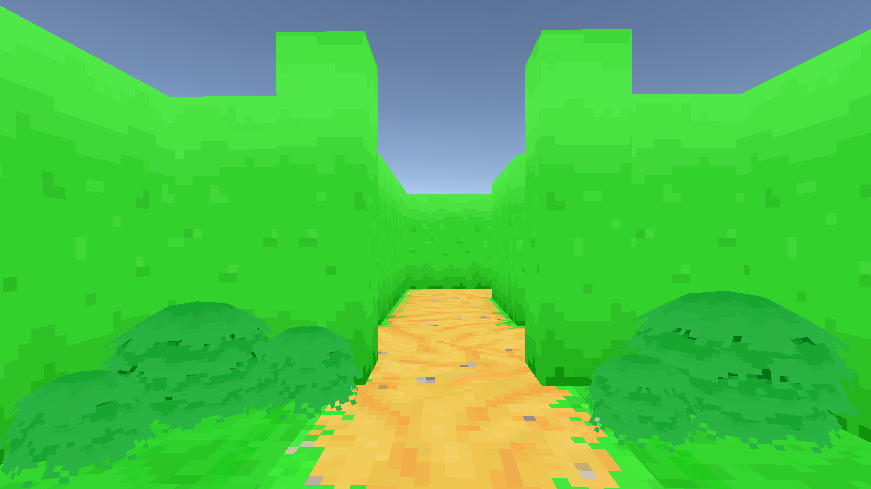

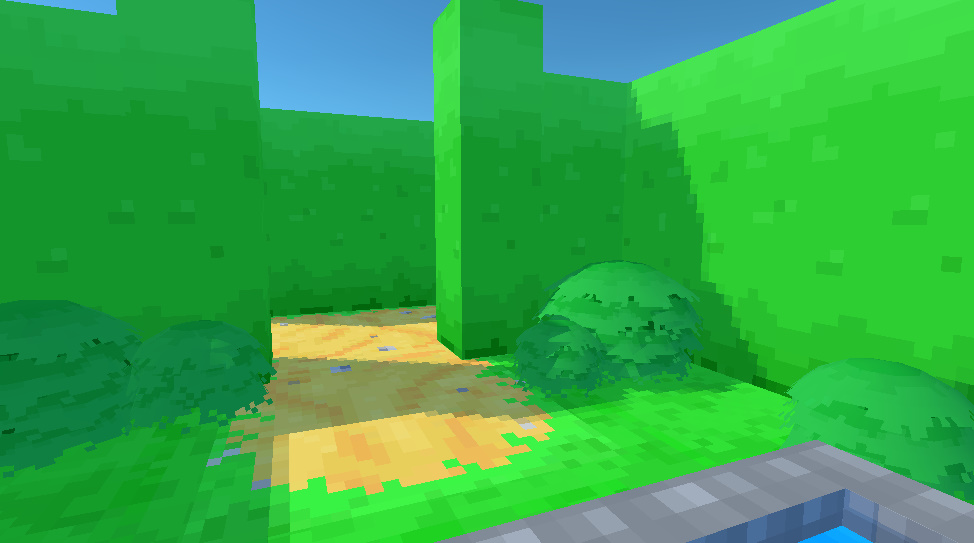

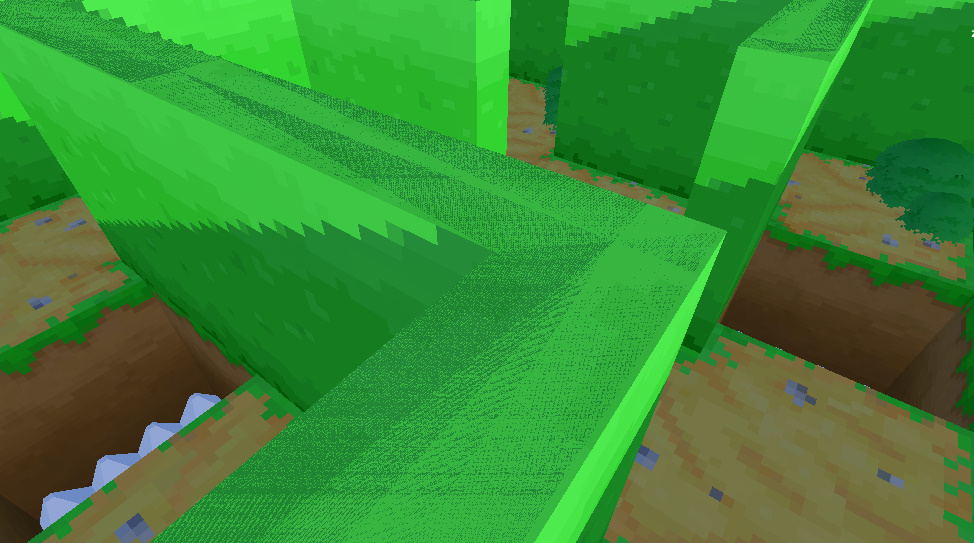

For the little maze, I wanted to see if I could maintain the pixel art aesthetic in the lighting, so that the shadows match the pixel art.

It still needs some work but I’m happy with how it turned out.

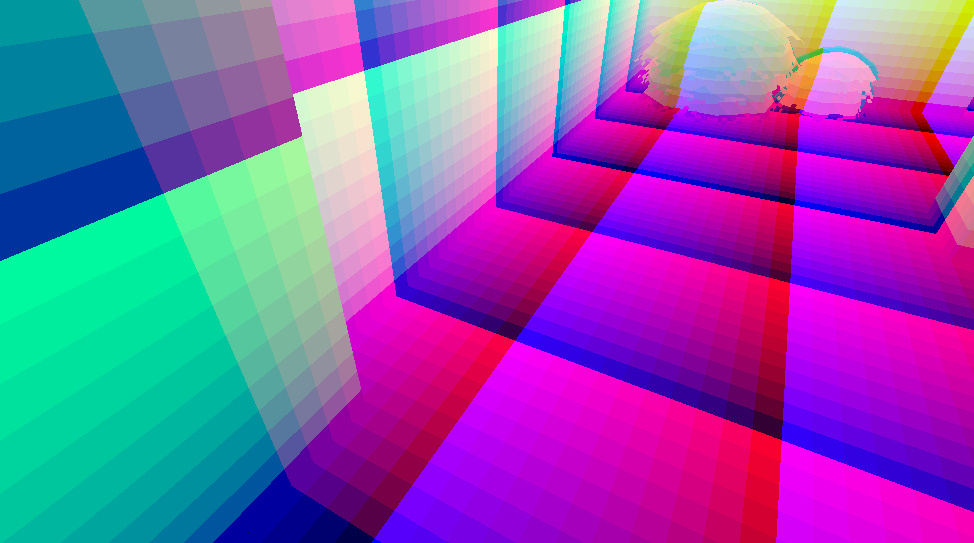

So how’s it done?

I take the world-space position of the pixel on the screen and snap it to a grid of world-space pixels, so every point inside the same grid cell shares one position. The lighting functions then use that snapped position to calculate the shadow.

Below is a little code snippet of how it’s calculated in the shader.

    half pixelSize = GetShadowPixel();
    float3 pixel_pos = floor(input.worldPosition / pixelSize) * pixelSize;

More can be done to calculate a better position, which I’ll return to once I’ve made more progress. But being able to manipulate values, and knowing how the code using it will be executed, always feels good. It feels less like a black box.
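To get a feel for what the floor-and-multiply does, it helps to run the same arithmetic off the GPU. Here is a rough Python sketch of it, not the shader itself. The `snap_to_grid` name, the `0.25` pixel size, and the sample positions are made up for illustration; in the shader the size comes from `GetShadowPixel()`.

```python
import math

def snap_to_grid(world_pos, pixel_size):
    """Snap each component down to a multiple of pixel_size,
    mirroring floor(worldPosition / pixelSize) * pixelSize."""
    return tuple(math.floor(c / pixel_size) * pixel_size for c in world_pos)

# Hypothetical value: world units covered by one shadow "pixel".
pixel_size = 0.25

# Two nearby points that fall inside the same grid cell.
a = snap_to_grid((1.30, 0.10, -0.60), pixel_size)
b = snap_to_grid((1.45, 0.20, -0.55), pixel_size)

print(a)       # (1.25, 0.0, -0.75)
print(a == b)  # True
```

Both points collapse to the same position, so they get the same shadow result, which is what gives the shadow its blocky edge. Note that `floor` rounds toward negative infinity, so the cells stay the same size on both sides of the world origin.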

Though it wasn’t without problems.

There were issues with flickering, with aligning shadows to the pixels, and with light passing through seemingly solid meshes, all of which took a bit of experimenting to solve.

There is room for improvement, but so far I’m happy with how it’s looking.

The lighting, shadows, and ambient color are driven by a day-night cycle component, which I rewrote from the old maze project.

Unity’s rendering pipeline feels a little less mysterious to me now. And I’m looking forward to exploring the limits of what I can do as I continue to work with it.

But next up, I’m going to dive a little deeper into supporting more lighting features. I’d like to add more light sources like a lantern or sconce to light up the maze at night. It should be fun.