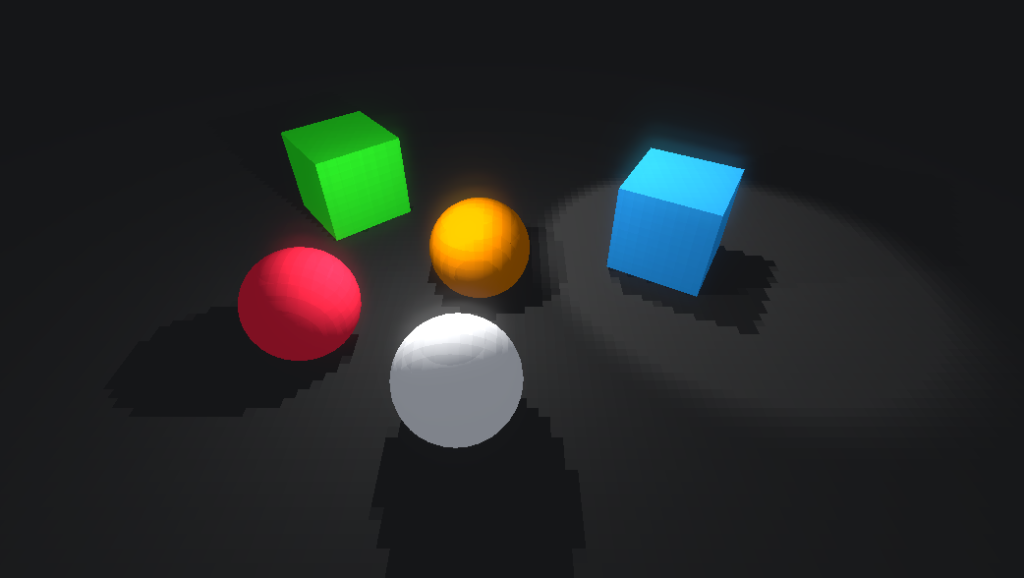

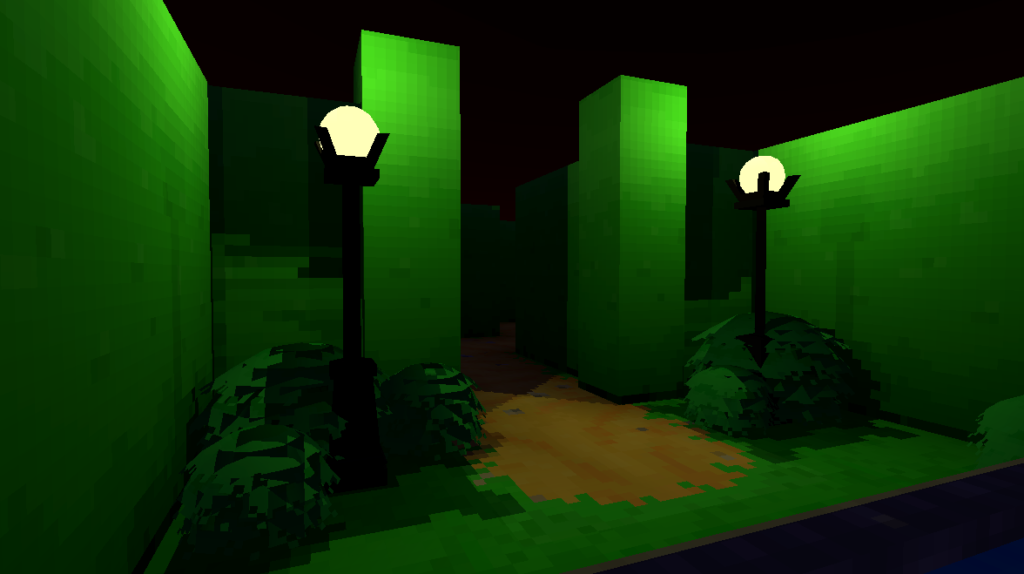

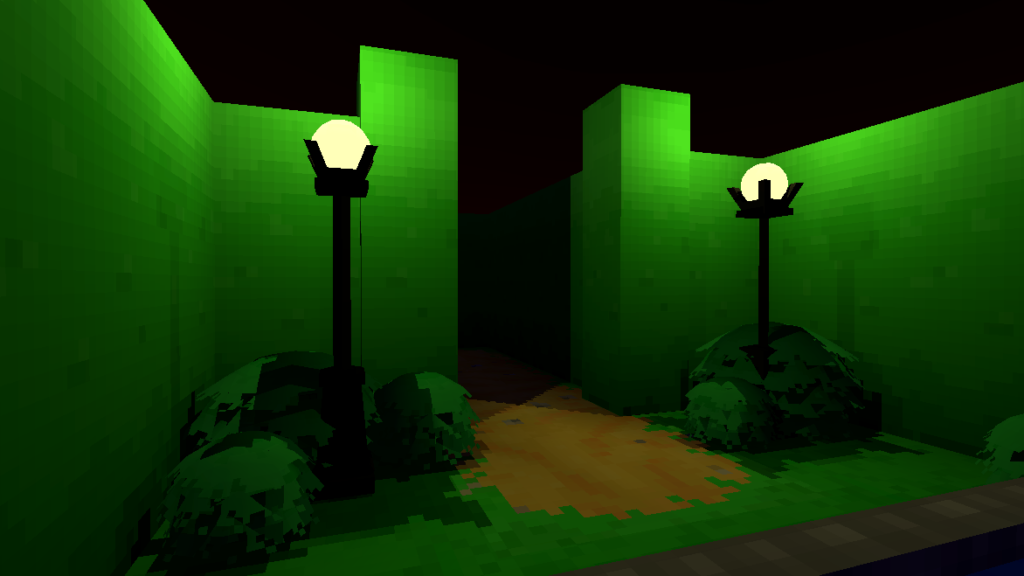

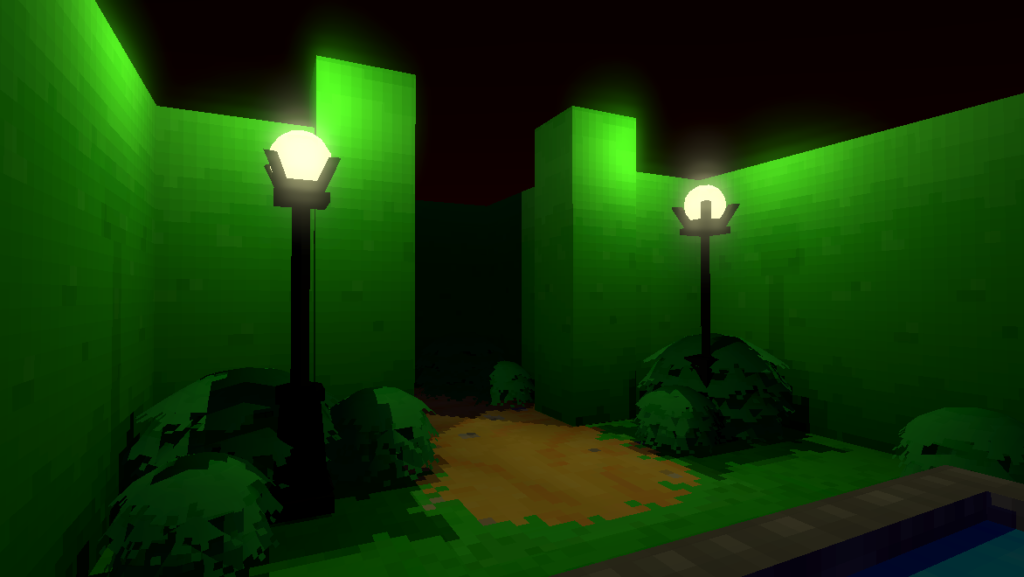

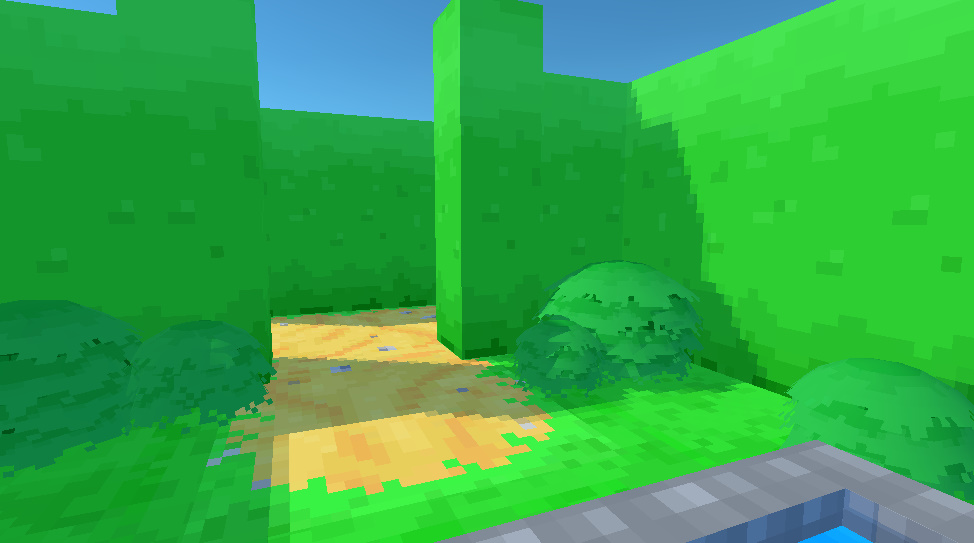

This past week I’ve been experimenting with texture generation, both to learn more about procedural generation and to apply what I learn to The Maze Where The Minotaur Lives.

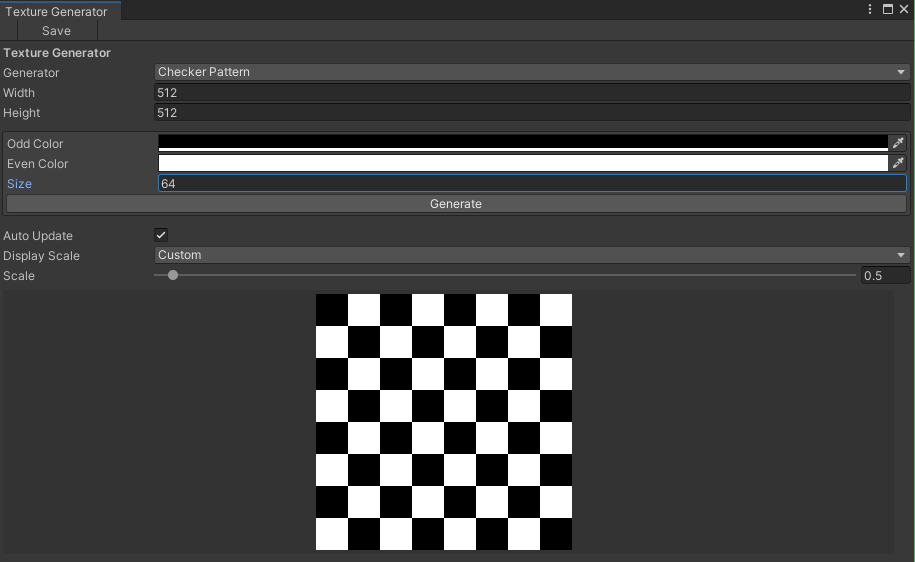

To start out, I created a simple editor window in Unity that lets me create and test new texture generators easily, with controls to adjust their values and see the results.

The first generator was a basic checker pattern. I wanted to figure out the interfaces needed to create the editor window and how to generate textures before moving on to anything more complicated.
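For anyone curious what a checker generator boils down to, here is a minimal sketch in plain Python rather than the Unity code used in the editor window. The function name, parameters, and the list-of-rows pixel layout are all assumptions made for illustration.

```python
def checker_texture(width, height, cell_size,
                    color_a=(0, 0, 0), color_b=(255, 255, 255)):
    """Return a height x width grid of RGB tuples forming a checkerboard."""
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            # A pixel's cell is found by integer division; the colour flips
            # whenever the sum of the cell column and cell row changes parity.
            is_even_cell = ((x // cell_size) + (y // cell_size)) % 2 == 0
            row.append(color_a if is_even_cell else color_b)
        rows.append(row)
    return rows


# A 4x4 texture with 2x2 cells: two dark and two light squares.
texture = checker_texture(4, 4, 2)
```

In Unity the same per-pixel loop would fill a colour array that is then uploaded to a texture; only the pattern logic is shown here.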

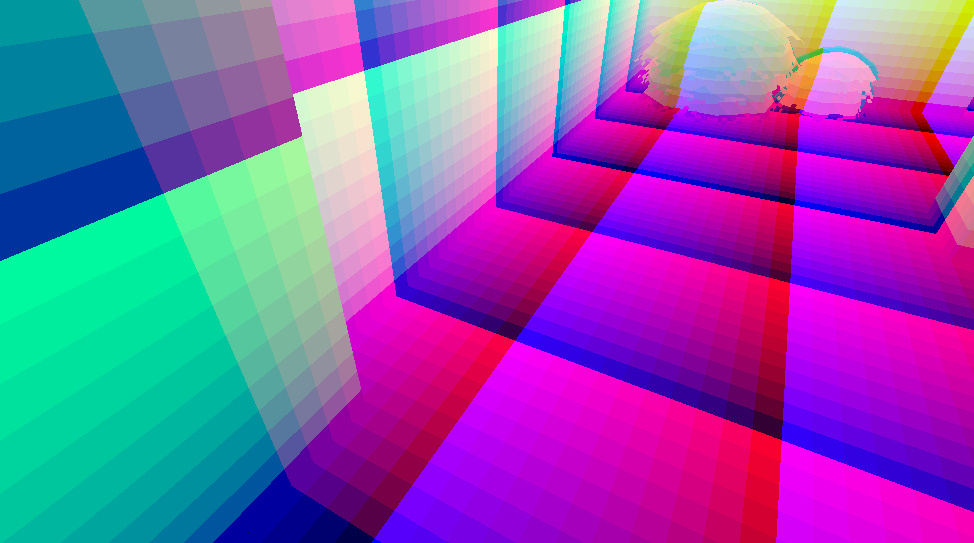

I then experimented with generating gradients, creating a UV gradient and a diagonal color gradient.

Next, I tried a variety of noise algorithms, starting with Unity’s Random class to generate a random texture.
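The two gradients can be sketched roughly like this. This is plain Python with hypothetical function names, colours are float RGB tuples in the 0 to 1 range, and the choice of red for u and green for v is a common convention that I am assuming here.

```python
def normalized(i, size):
    """Map a pixel index to the 0..1 range (0 for a one-pixel axis)."""
    return i / (size - 1) if size > 1 else 0.0


def uv_gradient(width, height):
    """Red encodes the horizontal coordinate u, green the vertical coordinate v."""
    return [[(normalized(x, width), normalized(y, height), 0.0)
             for x in range(width)]
            for y in range(height)]


def lerp_color(a, b, t):
    """Linearly interpolate between two RGB tuples."""
    return tuple(ca + (cb - ca) * t for ca, cb in zip(a, b))


def diagonal_gradient(width, height, start, end):
    """Blend from start to end along the diagonal from (0, 0) to (1, 1)."""
    tex = []
    for y in range(height):
        v = normalized(y, height)
        row = []
        for x in range(width):
            u = normalized(x, width)
            # Averaging u and v gives 0 at one corner and 1 at the opposite one.
            row.append(lerp_color(start, end, (u + v) / 2))
        tex.append(row)
    return tex
```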

I also added support for Value and Perlin noise.
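As a rough illustration of value noise, the sketch below assigns a pseudo-random value to every integer lattice point and smoothly interpolates between the four surrounding points. It is plain Python, not the generator from the editor window; the integer hash and its constants are arbitrary choices of mine, and the function names are hypothetical. Perlin noise follows the same lattice idea but interpolates gradients instead of raw values.

```python
import math


def lattice_value(ix, iy, seed=0):
    """Deterministic pseudo-random value in [0, 1] for a lattice point.

    The constants are arbitrary; any decent integer hash would do.
    """
    n = (ix * 374761393 + iy * 668265263 + seed * 144665) & 0xFFFFFFFF
    n = ((n ^ (n >> 13)) * 1274126177) & 0xFFFFFFFF
    n ^= n >> 16
    return n / 0xFFFFFFFF


def smoothstep(t):
    """Ease the interpolation factor so cell borders are less visible."""
    return t * t * (3 - 2 * t)


def value_noise(x, y, seed=0):
    """Bilinear interpolation of the four lattice values around (x, y)."""
    x0, y0 = math.floor(x), math.floor(y)
    tx, ty = smoothstep(x - x0), smoothstep(y - y0)
    v00 = lattice_value(x0, y0, seed)
    v10 = lattice_value(x0 + 1, y0, seed)
    v01 = lattice_value(x0, y0 + 1, seed)
    v11 = lattice_value(x0 + 1, y0 + 1, seed)
    low = v00 + (v10 - v00) * tx    # blend along x on the y0 edge
    high = v01 + (v11 - v01) * tx   # blend along x on the y0 + 1 edge
    return low + (high - low) * ty  # blend the two edges along y
```

Sampling `value_noise(x * scale, y * scale)` for every pixel gives the texture, with `scale` controlling how many lattice cells fit across it.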

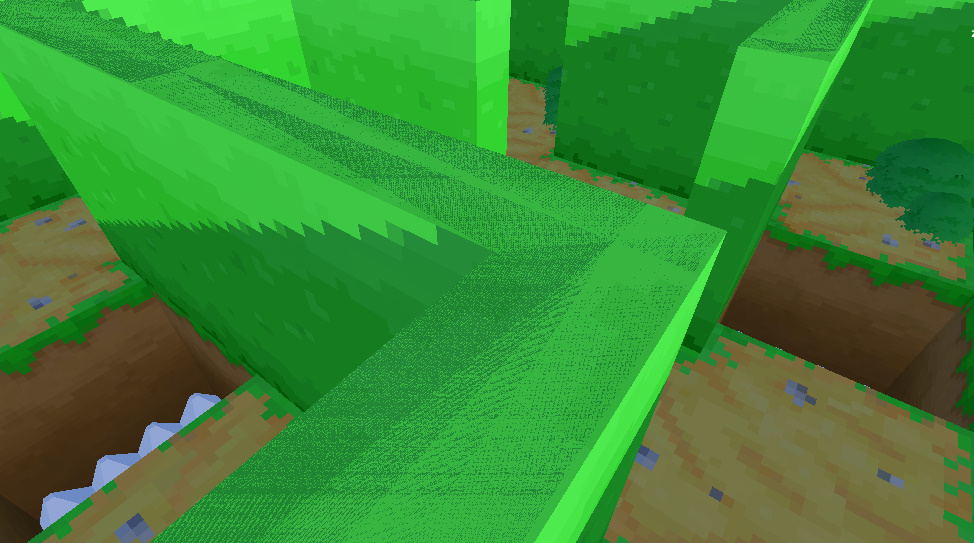

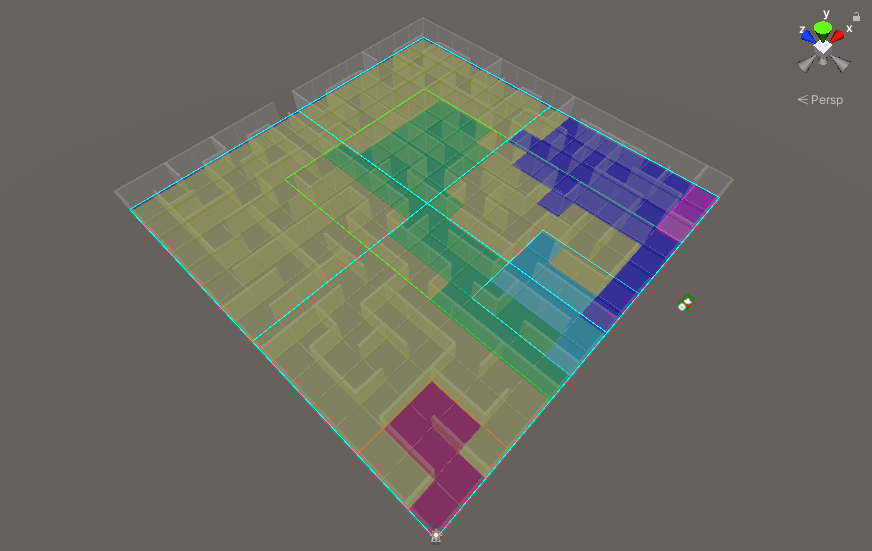

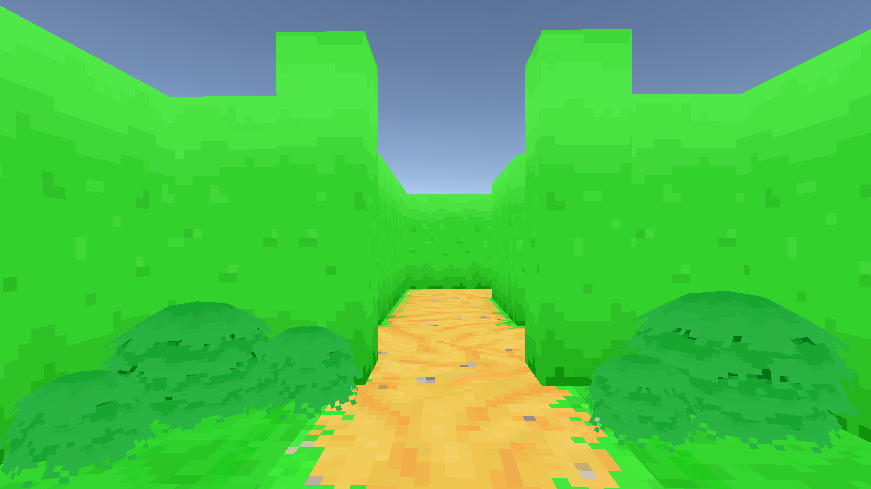

I’ll be using these textures to help generate and visualize variations that will be used in The Maze Where the Minotaur Lives for walls and other effects.

Improving Performance

When adjusting the settings for the Perlin noise, there is a delay between moving a slider and the texture being regenerated. This is even more noticeable when raising the number of octaves.

This isn’t too big of a problem while loading and generating a map in-game, especially when the maps are small. But I couldn’t help wondering if I could tweak the code to increase the performance by using Unity’s Job System in the editor.
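The octave slowdown makes sense if the octaves are layered in the usual fractal way, where every extra octave means one more noise sample per pixel. The sketch below assumes that approach; `base_noise` is a cheap stand-in rather than real Perlin noise, and the `lacunarity` and `persistence` parameter names are conventional but not taken from my generator.

```python
import math


def base_noise(x, y):
    """Stand-in for a real noise function; returns a smooth value in [0, 1]."""
    return 0.5 + 0.5 * math.sin(x * 12.9898 + y * 78.233)


def fractal_noise(x, y, octaves, lacunarity=2.0, persistence=0.5):
    """Sum several octaves, each at a higher frequency and lower amplitude."""
    total = 0.0
    amplitude = 1.0
    frequency = 1.0
    max_amplitude = 0.0
    for _ in range(octaves):
        # One noise sample per octave: this is where the cost comes from.
        total += base_noise(x * frequency, y * frequency) * amplitude
        max_amplitude += amplitude
        amplitude *= persistence
        frequency *= lacunarity
    return total / max_amplitude  # normalise back into [0, 1]


def samples_needed(width, height, octaves):
    """Total noise samples for one texture: the cost grows linearly with octaves."""
    return width * height * octaves
```

Under these assumptions, a 512×512 texture with 8 octaves needs 512 × 512 × 8 = 2,097,152 noise samples every time a slider moves.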

I have dabbled with it in the past, but I’ve never found much use for it, at least until now.

My initial attempt was to take the code and put it inside a “Job” to see what would happen. Surprisingly, it ran slower. My best guess is that a single job still does all the work on one thread, so moving it to another thread and waiting for it to complete just adds scheduling overhead without making the work any faster.

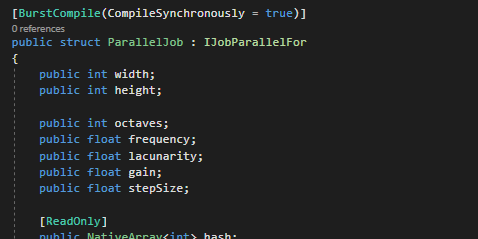

I then converted the code to use an “IJobParallelFor” instead. Parallel jobs split the work into batches so the same job can run across multiple worker threads. And since the noise is calculated independently for each pixel, it’s safe to generate it in batches.
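The batching idea can be sketched outside Unity as well. The Python below is only an illustration of the structure: the pixel function is a placeholder, the names are hypothetical, and Python threads will not show the speedup that Unity’s worker threads give. What it does show is that each batch writes only to its own range of indices, so the batches never interfere with each other and the result matches the serial version.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def pixel_value(index, width):
    """Placeholder per-pixel work; depends only on the pixel's own index."""
    x, y = index % width, index // width
    return 0.5 + 0.5 * math.sin(x * 0.1) * math.cos(y * 0.1)


def run_batch(start, end, width, out):
    """Process one contiguous batch of pixel indices."""
    for i in range(start, end):
        out[i] = pixel_value(i, width)


def generate_parallel(width, height, batch_size, workers=4):
    total = width * height
    out = [0.0] * total
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(run_batch, start, min(start + batch_size, total), width, out)
            for start in range(0, total, batch_size)
        ]
        for future in futures:
            future.result()  # wait for the batch and surface any exception
    return out


def generate_serial(width, height):
    return [pixel_value(i, width) for i in range(width * height)]
```

The batch size is the knob to tune: very small batches spend more time on scheduling, while very large ones leave some threads idle.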

There was a decent improvement in the GUI, with less delay when generating a 512×512 texture.

Even after some minor tweaks to the code and the number of batches, the further gains were small, and there were still noticeable delays in the GUI.

So I decided to take it one step further, and try out Unity’s Burst compiler.

I’ve heard and seen a few examples of it in action, and it looks a lot like black magic. So this felt like a good opportunity to try it out myself.

All it took was adding one line at the top of the job struct, the `[BurstCompile]` attribute, to compile the job with Burst, and the performance gain was incredible. The GUI now has no delays at all when adjusting the parameters for a 512×512 noise texture.

With a 1024×1024 texture, the Burst version is roughly as responsive as the CPU-bound version was at 512×512, whereas the CPU-bound version at 1024×1024 gives you enough time to make a coffee and come back.

Next, I’ll be updating the procedural framework to make use of these textures. I’m not entirely sure how long that will take with my current workload, but I’m looking forward to putting these to use and seeing what kinds of results I can get.