This post was originally written on January 9th, 2023. Better late than never, right?

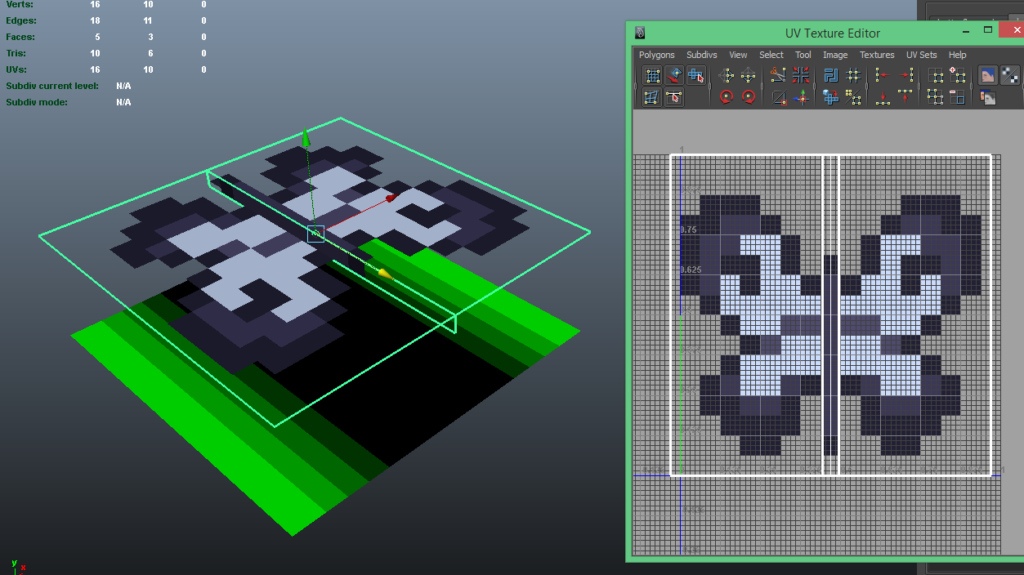

After many years, I’m finally moving away from Maya and starting to learn Blender.

Why the change?

Honestly, it was about time to change. Maya 2013 has served me well in a lot of projects, but it isn’t getting any more stable (it still crashes a lot).

In recent months, I’ve been working on character rigging and moving into more advanced techniques like facial rigging and blend shapes. I could learn these in Maya 2013 by digging up some old tutorials, or I could learn them in Blender and, in doing so, pick up new methods and workflows.

After Maya was in a particularly crashing mood, I figured it was about time I started learning Blender.

Why not subscribe to Maya LT instead?

I did for a while when my budget wasn’t so tight. Getting access to the new tools and improvements was incredibly useful. At least until I could no longer afford it.

But that wasn’t the real problem. Losing complete access to my working files was.

I ended up having to get the FBX model files I exported into Unity and import them into my old version of Maya. For the files I could recover, it required a substantial amount of work to get everything in working order again.

That experience is why I prefer not to subscribe to things anymore, especially to software with proprietary closed-source files. Autodesk has discontinued the subscription to Maya LT, so there’s that too.

So why didn’t I use Blender sooner then?

Blender wasn’t always as intuitive as it is today (and in some ways, it still isn’t) for newcomers.

I remember attempting to learn previous versions of Blender, and it just wasn’t very clear without being some kind of master shortcut pianist.

Following tutorials on Blender on YouTube wasn’t all that helpful either, with significant differences between versions over the years.

It was also hard to find a tutorial that wasn’t glossing over the finer details and wasn’t assuming a lot of things about what you knew. Like things were obvious to them, but not to you.

I’ve also come across a few that jumped straight into installing X plug-ins because of “reasons”, which doesn’t really help a newcomer learn Blender.

For me, I like to get to know a tool before I start optimizing my workflow with a new plug-in or a “recommended” workflow. It gives me a chance to know what’s really available, especially as I learn more about the software.

Plus, if someone’s recommended plug-in no longer works, or no longer exists, you’re going to have a hard time figuring out how to do the same thing without it. So you might as well not bother with the fancy plug-ins until you know how to manage without them.

Granted, all these little incidents happened in prior versions over the last decade. Having failed to find adequate tutelage, I was only finding reasons not to learn Blender, especially when I was proficient in Maya and had automated a substantial portion of my workflow.

Also, setting the configuration to “Industry Standard” in Blender (which I always did) was a surefire way of making it impossible to learn and missing out on everything that makes Blender usable.

Long story short, nothing was helping me make a clear decision to commit to learning Blender. At least until now.

How am I learning Blender now?

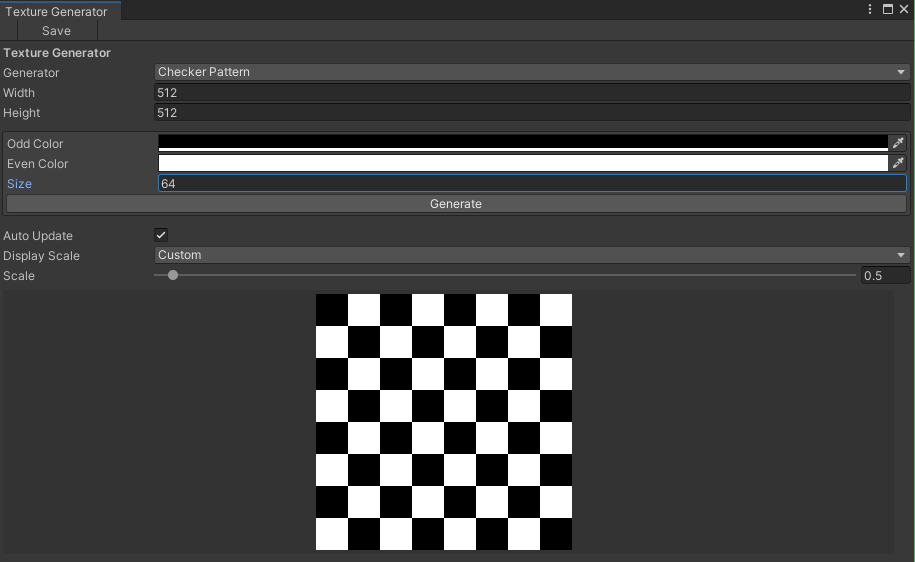

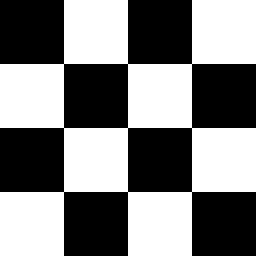

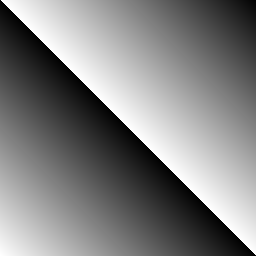

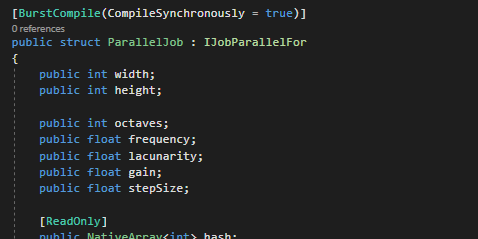

I’m taking a course provided by GameDev.tv, Complete Blender Creator 3.2: Learn 3D Modelling for Beginners, which has been taking me through the basics of Blender and teaching me about many of the hidden shortcuts and features that I would never have known about, or known to search for.

I’m also not alone as I’m learning. I’m working with my partner and a friend, who are going through the same course. Both of them have previous Maya experience.

Having someone to work through it with has been encouraging, especially when there are shared moments of “why the hell didn’t someone tell me about X?!” or when we’re telling each other about stuff we’ve found as we’ve been experimenting.

And so far?

So far, it’s been enjoyable, reminding me of the first time I opened Lightwave and 3DS Max when I started to learn how to model in those applications.

Learning Blender a small piece at a time, and really understanding how shortcuts are actually used, and why things are configured the way they are, has really helped in understanding Blender. It has also highlighted just how much I’ve missed out on, especially the useful features that really aid in the creative workflow.

So overall, daunting, but great.

My hopes are that I’ll eventually get back to the same level of automation and proficiency I have in Maya, which is going to take a while.

But until then, I’ll just have to keep at it.